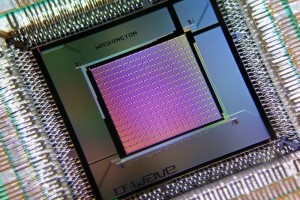

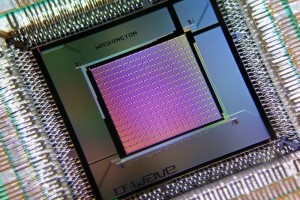

D-Wave 2X quantum computing

D-Wave Systems in British Columbia, Canada, is the only company in the world selling quantum computers, and it counts Google and NASA among its customers.

But after four years on the market there is still no clear evidence its machines can solve problems faster than ordinary computers.

Now the firm has announced the D-Wave 2X, and claims it is up to 15 times faster than regular PCs. However, outside experts contacted by New Scientist say the test is not a fair comparison.

The theory behind such computers, which exploit the weird properties of quantum mechanics, is sound. A device built using qubits, which can be both a 0 and a 1 at the same time, promises to vastly outperform machines built on regular binary bits for certain problems, like searching a database.

But putting that theory into practice has proved tricky, and though experiments show the D-Wave machines display quantum behaviour, it’s not clear this is responsible for speeding up computation.

The D-Wave 2X is the company’s third computer to go on sale, and features more than 1000 qubits – double the previous model. Other changes have reduced noise and increased performance, says D-Wave’s Colin Williams.

D-Wave put the machine through its paces with a series of benchmark tests based on solving random optimisation problems.

600 times faster

For example, imagine a squad of football players, all with different abilities and who work better or worse in different pairs. One of the problems is essentially equivalent to picking the best team based on these constraints.
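As a rough illustration of the team-picking problem, the sketch below scores a team as the sum of each player's ability plus a bonus or penalty for every pair that plays together, then checks every possible team. The player names, numbers and function names are invented for illustration, and exhaustive search is only practical for tiny squads; this is not the method used in D-Wave's benchmark or by the classical solvers.

```python
from itertools import combinations

def team_score(team, skill, synergy):
    """Individual abilities plus the pairwise bonus/penalty for each pair in the team."""
    total = sum(skill[player] for player in team)
    total += sum(synergy.get(frozenset(pair), 0) for pair in combinations(team, 2))
    return total

def best_team(skill, synergy, size):
    """Try every team of the given size and return the highest-scoring one."""
    return max(combinations(sorted(skill), size),
               key=lambda team: team_score(team, skill, synergy))

# Hypothetical toy squad: ability per player, and how well some pairs combine.
skill = {"Ana": 5, "Ben": 4, "Cat": 4, "Dev": 3}
synergy = {
    frozenset({"Ana", "Ben"}): -3,  # these two work badly together
    frozenset({"Ben", "Cat"}): 2,
    frozenset({"Cat", "Dev"}): 4,   # these two work very well together
}

team = best_team(skill, synergy, size=2)
print(team, team_score(team, skill, synergy))
```

In this toy squad the best pair leaves out the strongest individual player, because the pairwise terms outweigh raw ability. The number of possible teams grows very quickly with squad size, which is why such problems are used as benchmarks.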

D-Wave compared the 2X’s results against specialised optimisation software running on an ordinary PC, and reported that its machine found an answer between two and 15 times as quickly. And if you leave aside the time it takes to enter the problem and read out the answer, the pure computation time was eight to 600 times faster.

“This is exciting news, because these solvers have been highly optimised to compete head-to-head with D-Wave’s machines,” says Williams. “On the last chip we were head-to-head, but on this chip we’re pulling away from them quite significantly.”

An important wrinkle is that finding the absolute best solution is much more difficult than finding a pretty good one, so D-Wave gave its machine 20 microseconds of calculation time before reading out the answer. The regular computers then had to find a solution of equivalent quality, however long that took.

This makes it less of a fair fight, says Matthias Troyer of ETH Zurich in Switzerland, who has worked on software designed to enable regular computers to compete with D-Wave. A true comparison should measure the time taken to reach the best answer, he argues. “My initial impression is that they looked to design a benchmark on which their machine has the best chance of succeeding,” he says.

It’s a bit like a race between a marathon runner and a sprinter, in which the sprinter goes first and sets the end point when she gets tired. The marathon runner will struggle to replicate her short-range performance, but would win overall if the race were longer. “Whether the race they set up is useful for anything is not clear,” says Troyer.

But Williams says D-Wave’s customers aren’t interested in the absolute best solutions – they just want good answers, fast. “This is a much more realistic metric.”
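The difference between the two metrics, time to a good answer versus time to the best answer, can be seen in a toy experiment. The sketch below runs a simple random bit-flip search on a made-up problem (maximise the number of 1s in a bit string) and counts how many steps it takes to reach a chosen target quality. The solver, the problem and all names are assumptions for illustration only; they are not the solvers or problems used in D-Wave's tests.

```python
import random

def steps_to_target(score, n_bits, target, rng, max_steps=100_000):
    """Random single-bit-flip search; return the step count at which the
    score first reaches `target`, or None if it never does within max_steps."""
    state = [rng.randint(0, 1) for _ in range(n_bits)]
    current = score(state)
    for step in range(max_steps + 1):
        if current >= target:
            return step
        i = rng.randrange(n_bits)
        state[i] ^= 1                # flip one bit
        new = score(state)
        if new >= current:
            current = new            # keep the flip if it is no worse
        else:
            state[i] ^= 1            # otherwise undo it
    return None

n = 40
# Same seed for both runs, so the search follows the same path and only
# the stopping point differs.
good = steps_to_target(sum, n, target=int(0.9 * n), rng=random.Random(0))
best = steps_to_target(sum, n, target=n, rng=random.Random(0))
print("steps to a pretty good answer:", good)
print("steps to the best answer:", best)
```

Because both runs follow the same search path, the good-enough target can never take longer to reach than the optimum. Which stopping rule a benchmark adopts therefore shapes the headline number, which is the point of contention between Troyer and Williams.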

Fair comparison?

Questions have also been raised about the PC used in the tests. D-Wave used a single core on an Intel Xeon E5-2670 processor, but that chip has eight such cores, and most PCs have at least four. Multiple cores allow a processor to split up computation and get results faster, so D-Wave’s numbers should come down when compared with a fully utilised chip, says Troyer.

Communication between cores introduces some slowdown, so doubling the number of cores doesn’t double performance, says Williams. Even assuming zero slowdown, you’d need a massive computer to tackle the largest problems, he says. “You’d need 600 classical cores to match us at that scale.”
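The arithmetic behind this exchange can be sketched in a few lines. The code below takes the single-core speed-up figures quoted in the article and divides them by the number of cores, scaled by an assumed parallel efficiency. The `efficiency` parameter and the function names are hypothetical; the article gives no measured value for how much slowdown communication between cores introduces.

```python
def adjusted_speedup(single_core_speedup, cores, efficiency=1.0):
    """Speed-up left over when the classical side uses `cores` cores.

    `efficiency` is an assumed fraction of ideal scaling (1.0 means
    doubling the cores doubles the speed; lower values model slowdown).
    """
    return single_core_speedup / (cores * efficiency)

def cores_to_match(single_core_speedup, efficiency=1.0):
    """Classical cores needed to cancel out a given single-core speed-up."""
    return single_core_speedup / efficiency

# Figures from the article: up to 15x overall, up to 600x pure computation,
# measured against one core of an eight-core chip.
full_chip = adjusted_speedup(15, cores=8)                # ideal scaling
pure_compute = adjusted_speedup(600, cores=8)            # ideal scaling
lossy = adjusted_speedup(15, cores=8, efficiency=0.5)    # assumed 50% efficiency
needed = cores_to_match(600)                             # zero slowdown

print(full_chip, pure_compute, lossy, needed)
```

Under ideal scaling, the best-case 15-fold overall advantage shrinks to a little under two-fold against all eight cores, while the 600-fold pure-computation figure would need 600 cores to cancel out, matching Williams's estimate.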

Other computing hardware might be better suited to a competition with D-Wave, says Umesh Vazirani of the University of California, Berkeley – graphics processing units (GPUs) are often used for large-scale parallel computation.

“The proper comparison would be to run simulations on GPUs, and in the absence of such simulations it is hard to see why a claim of speed-up holds water,” Vazirani says.

Williams says D-Wave is planning to publish GPU benchmarks in future.

In the end, the only thing that will prove D-Wave’s machines really are working quantum computers is a runaway performance boost on larger and larger problems, known as “quantum speed-up”. D-Wave explicitly says it is not claiming such a speed-up with these tests – a good sign, says Troyer.

A previous test in 2013 claimed a 3600-fold performance increase but was later discredited, and D-Wave took the criticisms on board. “I think they are getting much more serious in the statements they make,” Troyer says.

Syndicated Content: Jacob Aron, New Scientist

Alun Williams